Although the details obviously differ, all 3G and 4G mobile wireless data solutions share a common goal: drive data as fast as it can go, but no faster. In other words, these systems are designed to take into account the current RF conditions: the better the radio link, the higher the data rate that can be supported. On the downlink in particular, the network receives regular reports from each active mobile on the current "channel quality" (which I'll define in a moment), and uses that (and other) information to determine the specific transmission format to be used to send data to those devices. These reports are typically sent every few milliseconds, allowing the network to fine-tune the transmissions under rapidly changing conditions.

"Channel quality," in this context, is not some number indicating how "good" the radio channel is. "Good" channel quality does not necessarily mean that the downlink signal is strong, or that interference levels are low, or that pilot pollution is under control, although certainly all of these measures could factor into the decision. Instead, "good" really means that the mobile believes that, under the current conditions, it could reliably receive a high data rate over the radio link. That's not to say that it actually would receive a high data rate, just that it could if the network decided to try.

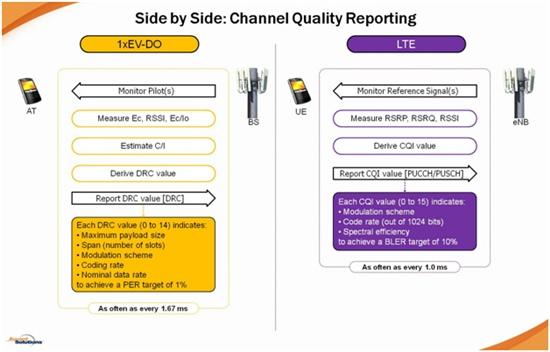

In the specific case of 1xEV-DO and LTE, active ATs (access terminals) and UEs (user equipment) continuously measure a common reference signal on the downlink and use that information to estimate how the corresponding high-speed data channel would perform under the same conditions. This estimate is in turn encoded as a number and transmitted back to the network: the Data Rate Control (DRC) value in 1xEV-DO, or the Channel Quality Indicator (CQI) in LTE.

Interestingly, the relevant standards for channel quality reporting do not dictate exactly how each mobile should arrive at its channel quality estimate. All that is required is that the results meet the minimum error requirements for the technology. This leaves mobile chipset vendors free to develop proprietary algorithms in pursuit of an edge over their competitors in cost, reliability and performance.

The figure above illustrates the basic process for estimating and reporting channel quality in 1xEV-DO and LTE networks.

Although it might seem more sensible to measure the actual downlink data channels to arrive at the quality estimate, data channels are shared among the active users, and their transmission formats and power levels can vary wildly from moment to moment; in fact, the data channels may go idle entirely for periods of time when there is no data to send. To avoid these problems, both technologies base their channel estimates on steady, predictable reference channels: the Pilot channel in 1xEV-DO and the embedded Reference Signals in LTE. (LTE does, however, define a method for the UE to estimate the Energy per Resource Element, or EPRE, of the data channel from the EPRE of the reference signals.)

Since the quality estimation algorithms are proprietary, there is little public information on exactly what the mobiles measure. Typical inputs may include the signal strength of the pilot (Ec or RSRP), the total received power across the channel bandwidth (RSSI), and a ratio of pilot strength to total received power that reflects interference and loading (Ec/Io or RSRQ). In the specific case of 1xEV-DO, I believe most devices derive a carrier-to-interference (C/I) ratio as part of the algorithm, since it tends to correlate well with the AT's ability to decode downlink data.
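To make the LTE measurements a little more concrete, here is a small sketch that computes RSRQ from RSRP and RSSI using the definition in 3GPP TS 36.214 (RSRQ = N × RSRP / RSSI, where N is the number of resource blocks in the RSSI measurement bandwidth). The function name and example numbers are illustrative only; how a particular chipset combines such measurements into its channel quality estimate is not public.

```python
import math


def rsrq_db(rsrp_dbm: float, rssi_dbm: float, n_rb: int) -> float:
    """RSRQ in dB from the TS 36.214 definition RSRQ = N * RSRP / RSSI.

    rsrp_dbm: average power of a single reference-signal resource element (dBm)
    rssi_dbm: total received power over the measurement bandwidth (dBm)
    n_rb:     number of resource blocks in the RSSI measurement bandwidth
    """
    # In the dB domain the ratio becomes a difference and N becomes 10*log10(N).
    return 10 * math.log10(n_rb) + rsrp_dbm - rssi_dbm


# Example: a 10 MHz carrier (50 resource blocks).
print(round(rsrq_db(rsrp_dbm=-95.0, rssi_dbm=-65.0, n_rb=50), 2))
```

With RSRP at -95 dBm and RSSI at -65 dBm on 50 resource blocks, this works out to roughly -13 dB. Because RSSI rises with both interference and the serving cell's own load, RSRQ drops as either increases.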

In any event, both the AT and the UE process the measurements and determine the fastest transmission format each is capable of correctly decoding at that time. That format is then matched to one of a set of possible values defined in the standards, ensuring that both the mobile and the network interpret the value in the same way.
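The final matching step can be sketched as follows, assuming (hypothetically) that the mobile has already condensed its measurements into a single effective SINR estimate and compares it against a sorted threshold table. Both the SINR abstraction and the threshold values are placeholders for illustration; the standards fix only what each reported value means, not how the mobile chooses it.

```python
from bisect import bisect_right

# HYPOTHETICAL effective-SINR thresholds (dB) for CQI 1 through 15.
# Real mappings are proprietary to each chipset vendor; these evenly
# spaced placeholder values exist only to make the example run.
CQI_SINR_THRESHOLDS_DB = [-6.0 + 2.0 * i for i in range(15)]  # -6.0 ... 22.0


def select_cqi(sinr_db: float) -> int:
    """Return the highest CQI whose threshold the SINR meets, or 0 if none."""
    # bisect_right counts the thresholds <= sinr_db. Since CQI n uses list
    # index n-1 and the list is sorted, that count is the CQI index itself.
    return bisect_right(CQI_SINR_THRESHOLDS_DB, sinr_db)


for sinr in (-10.0, 0.5, 13.0, 30.0):
    print(f"{sinr:6.1f} dB -> CQI {select_cqi(sinr)}")
```

A monotonic lookup like this suits LTE's CQI scale; a 1xEV-DO equivalent would have to cope with DRC values that are not ordered strictly by rate.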

- In 1xEV-DO Rev. A, the DRC value ranges from 0 to 14, with 0 indicating very poor conditions (the "null rate," 0 kbps) and 14 indicating excellent conditions (3.072 Mbps). Higher DRC values indicate that the AT can support higher data rates, with one exception: DRC 13 (1.536 Mbps) actually falls between DRC 10 (1.2288 Mbps) and DRC 11 (1.8432 Mbps). This is a matter of backwards compatibility: Rev. A appended DRC 13 and 14 to the original Rev. 0 values (0 through 12) rather than renumbering them.

- In LTE, the CQI value ranges from 0 to 15, again with 0 indicating very poor conditions ("out of range," so no data can be sent) and 15 indicating excellent conditions. Because the data rate in LTE also depends on the number of resource blocks allocated and other factors, there is no specific data rate associated with CQI 15. Note that the UE may report multiple CQI values, for example for different subbands and, when MIMO spatial multiplexing is in use, for each codeword.
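For 1xEV-DO, each DRC value maps to a payload size and a span in slots, so the nominal rate is simply payload / (span × slot duration), with one slot lasting 1/600 s. The sketch below uses the Rev. A payload and span values as reproduced here for illustration (verify them against 3GPP2 C.S0024-A before relying on them) and shows why DRC 13 is out of order.

```python
# 1xEV-DO Rev. A DRC index -> (payload size in bits, span in slots).
# Reproduced for illustration; verify against 3GPP2 C.S0024-A.
DRC_TABLE = {
    1: (1024, 16), 2: (1024, 8), 3: (1024, 4), 4: (1024, 2),
    5: (2048, 4), 6: (1024, 1), 7: (2048, 2), 8: (3072, 2),
    9: (2048, 1), 10: (4096, 2), 11: (3072, 1), 12: (4096, 1),
    13: (5120, 2),  # added in Rev. A
    14: (5120, 1),  # added in Rev. A
}
SLOTS_PER_SECOND = 600  # one slot = 1/600 s, about 1.667 ms


def nominal_rate_bps(drc: int) -> int:
    """Nominal rate = payload / (span * slot duration); DRC 0 is the null rate."""
    if drc == 0:
        return 0
    bits, span = DRC_TABLE[drc]
    return bits * SLOTS_PER_SECOND // span  # divides exactly for every entry


# Sorting DRC indices by nominal rate shows DRC 13 landing between 10 and 11.
print(sorted(range(15), key=nominal_rate_bps))
print(nominal_rate_bps(14))
```

Note that several DRC values share the same nominal rate but differ in span (for example DRC 9 and DRC 10), which is why the rate alone does not identify a DRC value.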

Every value corresponds to a particular set of transmission parameters, which in turn dictate how many bits the mobile may receive in each transmission. DRC values identify the maximum payload size, the number of slots the transmission will take (the span), and the modulation and coding scheme that will be used. CQI values identify the modulation scheme, the coding rate and the nominal spectral efficiency, which then determines how many bits each allocated resource block can carry.
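For LTE, the modulation order and code rate attached to each CQI value give the nominal spectral efficiency directly (bits per modulation symbol × code rate). The sketch below encodes the 4-bit CQI table from 3GPP TS 36.213 (Table 7.2.3-1) and multiplies the efficiency by an assumed number of data-carrying resource elements per resource block. The `data_res` argument is a hypothetical input: the real figure depends on control-region size, reference-signal overhead and other configuration, and actual schedulers pick from the standardized transport block size tables rather than using this product directly.

```python
# LTE 4-bit CQI table (3GPP TS 36.213, Table 7.2.3-1):
# CQI index -> (bits per modulation symbol, code rate x 1024).
# CQI 0 means "out of range" and has no entry.
CQI_TABLE = {
    1: (2, 78), 2: (2, 120), 3: (2, 193), 4: (2, 308), 5: (2, 449), 6: (2, 602),
    7: (4, 378), 8: (4, 490), 9: (4, 616),
    10: (6, 466), 11: (6, 567), 12: (6, 666), 13: (6, 772), 14: (6, 873), 15: (6, 948),
}


def spectral_efficiency(cqi: int) -> float:
    """Nominal information bits per resource element for a CQI index."""
    if cqi == 0:
        return 0.0
    bits_per_symbol, rate_x1024 = CQI_TABLE[cqi]
    return bits_per_symbol * rate_x1024 / 1024


def bits_per_rb(cqi: int, data_res: int) -> float:
    """Approximate bits one resource block carries in one subframe, given a
    HYPOTHETICAL count of resource elements left over for data."""
    return spectral_efficiency(cqi) * data_res


print(round(spectral_efficiency(15), 4))
print(bits_per_rb(15, data_res=120))
```

With CQI 15 and an assumed 120 data resource elements, for example, this gives about 667 bits per resource block per subframe.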

An AT can report a DRC value as often as once per slot (every 1.67 ms), although most systems are configured to repeat the same DRC value over 4 consecutive slots to improve reliability. Similarly, a UE can report CQI as often as once per subframe (every 1 ms), although in practice networks will likely configure a somewhat slower reporting rate.
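The arithmetic behind these reporting rates is simple but worth spelling out: holding each DRC value for 4 slots means a new value arrives only every 4 × 1.667 ≈ 6.67 ms, or 150 times per second, versus up to 1000 per second for per-subframe CQI. The helper names below are hypothetical, and the 5 ms LTE period in the last line is an illustrative choice, not a recommended setting.

```python
EVDO_SLOTS_PER_SECOND = 600      # 1xEV-DO slot: 1/600 s, about 1.667 ms
LTE_SUBFRAMES_PER_SECOND = 1000  # LTE subframe: 1 ms


def report_interval_ms(units_per_second: int, units_between_reports: int = 1) -> float:
    """Time between new channel-quality values when a new value can only
    start every `units_between_reports` slots or subframes."""
    return 1000 * units_between_reports / units_per_second


def new_values_per_second(units_per_second: int, units_between_reports: int = 1) -> float:
    """Number of distinct channel-quality values that can be sent per second."""
    return units_per_second / units_between_reports


print(report_interval_ms(EVDO_SLOTS_PER_SECOND, 4))         # DRC held for 4 slots
print(new_values_per_second(EVDO_SLOTS_PER_SECOND, 4))
print(new_values_per_second(LTE_SUBFRAMES_PER_SECOND))      # CQI every subframe
print(new_values_per_second(LTE_SUBFRAMES_PER_SECOND, 5))   # hypothetical 5 ms period
```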

Note that these two technologies are designed to achieve very different error rates on their data transmissions: 1xEV-DO is expected to meet a Packet Error Rate (PER) of 1% or less, while LTE aims for a Block Error Rate (BLER) of 10% or less. Although both systems have multiple methods for error recovery, the more stringent requirement for 1xEV-DO means that ATs tend to be more conservative in their channel quality estimates, accepting somewhat lower data rates in exchange for better efficiency and lower delay.
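The trade-off can be illustrated with a toy link-adaptation rule: choose the highest-rate format whose estimated error probability meets the target. The candidate formats and error estimates below are entirely hypothetical, and treating retransmission attempts as independent is a simplifying assumption; the point is only that a 1% target settles on a lower rate than a 10% target while needing fewer retransmissions on average.

```python
# HYPOTHETICAL candidate formats: (nominal rate in Mbps, estimated error
# probability under the current channel conditions). Illustrative only.
CANDIDATES = [(0.6, 0.002), (0.9, 0.008), (1.2, 0.04), (1.8, 0.09), (2.4, 0.35)]


def pick_rate(candidates, error_target):
    """Highest nominal rate whose estimated error probability meets the target."""
    eligible = [rate for rate, p_err in candidates if p_err <= error_target]
    return max(eligible) if eligible else 0.0


def expected_transmissions(p_err):
    """Mean attempts per packet if each attempt fails independently with p_err."""
    return 1 / (1 - p_err)


for target in (0.01, 0.10):  # a 1xEV-DO-like and an LTE-like target
    rate = pick_rate(CANDIDATES, target)
    p_err = dict(CANDIDATES)[rate]
    print(f"target {target:.0%}: {rate} Mbps, "
          f"{expected_transmissions(p_err):.3f} transmissions per packet")
```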

By having the mobile devices provide real-time channel quality estimates, both 1xEV-DO and LTE networks can drive the downlink data channels at the highest possible data rate. This may not be the maximum data rate that the technology is capable of delivering, but it should match what the mobile is actually capable of receiving at that moment.